Bots are getting scary

-

From a layman's perspective, does not electronic communication rely on electric pulses perfectly following their paths with the precise voltage and duration? And considering the physical size of the hardware, is it possible that electronic pulses can, due to extreme miniaturization, sometimes follow a path not intended by its designers, creating unexpected errors which can be difficult to trace or replicate, yet nevertheless cause a program to function abnormally?

-

I don't disagree with you in principle, but the issue is that more than half the computers in the world are carrying errors. Many are carrying several errors, many of which may take years to appear.

The argument here that we are discussing is that the computer will always obey the program that the human has inputted because that's what computers do. They execute lines of code as programmed.

Except they don't; that is a wrong statement. They simply appear to execute lines of code correctly most of the time.

Never in the history of Intel computing have computers ever executed the code the programmer inputted and compiled.

The computer makes a copy of the code in RAM memory and executes that copy. It is fairly common for there to be a corruption in that copy, so you have a fail right there.

The truth is even if the copy is a good one, the computer does not execute the program correctly every time due to other errors that exist elsewhere.

And the computer cannot spot most errors. It has to rely on checksums, which don't reveal much, and errors can cancel out in checksums.
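As a rough illustration of errors cancelling out in a checksum, here is a minimal Python sketch. It assumes a deliberately weak byte-sum checksum (the function name `additive_checksum` and the sample bytes are made up for this example, not taken from any particular system). One byte is corrupted upwards by 1 and another downwards by 1, so the sum is unchanged:

```python
def additive_checksum(data: bytes) -> int:
    """Sum of all bytes modulo 256 (a deliberately weak, illustrative checksum)."""
    return sum(data) % 256


original = bytes([0x10, 0x20, 0x30, 0x40])
# Two compensating errors: first byte +1, third byte -1.
corrupted = bytes([0x11, 0x20, 0x2F, 0x40])

print(original != corrupted)                                        # True: the data differs
print(additive_checksum(original) == additive_checksum(corrupted))  # True: the checksum cannot tell
```

Stronger checks such as CRCs are designed to make this kind of cancellation much less likely, but no fixed-size check can catch every possible corruption.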

There are several places where corruption of the program is likely to occur.

I can name several kinds of errors and in every case the program the human inputted is not followed by the computer.

FYI, I worked as a second line support and third line technical support engineer with Hewlett Packard, working on Intel products, Sun systems, comms and telecoms.

I designed and built several servers, designed and built a clustered supercomputer on Linux, I programmed distribution software for Hewlett Packard, I acted as an advisor to technical support companies and worked with Radio Networking companies.

I can tell you now, computers do not always execute programs correctly, due to fundamental issues in the way they are constructed, configured and operated.

Check out real-time computing and the Ada language.

Ada is a strongly typed programming language that enjoys widespread use within the embedded systems and safety-critical software industry.

Ada solves some of these issues that plague Intel systems.

Computers were too unreliable for use on the Moon shot and NASA had to commission a new real-time system that guaranteed that their computer would execute code correctly. NASA invented real-time computing.

They would not have had to do that if computers executed code reliably and correctly, but they didn't and still don't.

The only systems that do execute code correctly are real-time systems. These are mission critical systems like air traffic control systems, where lives are at risk or lost if code is not executed correctly.

I do know what I am talking about here.

Why do you think the most common fix for a computer is, and always has been, "turn it off and on again"? It is because they often don't function correctly and don't execute code correctly. Turning them off and on again refreshes the operating system, the RAM images and so on, for a few hours until the next error hits.

So does a computer execute code exactly how it was told to in the code?

No, no way. Sometimes they do, sometimes they don't.

-

Excellent post, you are absolutely right.

Put simply, a CMOS logic gate is in essence a flip flop based upon a 3.5 volt threshold.

The problem is there is no such thing as a logic circuit outputting logic 1 or logic 0.

There are instead analogue circuits that output a range of voltages that we can force to behave like logic circuits.

If the voltage output of the logic circuit or gate is higher than 3.5 volts it is a logic 1; if it is less than 3.5 volts it is a logic 0. 3.5 volts has been adopted in CMOS as the threshold for logic 1.

CMOS gate values are supposed to be either 0 volts or 5 volts for those logic states, but they rarely are because they are analogue circuits trying to behave like digital circuits, so we use threshold values to detect the logic states.

In reality there could be any value between those voltages, and given losses in the circuits, a logic 1 of above 3.5 volts could be pulled down below 3.5 volts by a voltage sink of some kind and become a logic 0.

Then the logic has changed when it should not have changed and the computer program has then malfunctioned.

The logic state should really be unknown if the gate output voltage is less than the 3.5 volt threshold set for logic 1, but almost 3.5 volts.

When does a 0 become a 1? At what voltage: 3.50, 3.49, 3.48?

What happens if a gate output is at the 3.5 volt threshold and rippling slightly between 3.4 volts and 3.6 volts?
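To make the question concrete, here is a small Python sketch of a hard threshold applied to a rippling voltage. It is only a toy model under stated assumptions: the 3.5 volt threshold comes from the post above, while the function name `read_logic` and the sample voltages are invented for illustration and do not model any real gate.

```python
THRESHOLD_V = 3.5  # threshold quoted in the post; treated here as a hard cut-off


def read_logic(voltage: float, threshold: float = THRESHOLD_V) -> int:
    """Return logic 1 at or above the threshold, logic 0 below it."""
    return 1 if voltage >= threshold else 0


# Hypothetical samples of an output rippling between 3.4 V and 3.6 V.
ripple = [3.4, 3.6, 3.45, 3.55, 3.4, 3.6]
bits = [read_logic(v) for v in ripple]
transitions = sum(a != b for a, b in zip(bits, bits[1:]))

print(bits)         # [0, 1, 0, 1, 0, 1]
print(transitions)  # 5
```

A ripple of only 0.2 volts peak to peak flips the interpreted bit on every sample in this toy model, which is the "indecision" being described.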

This is the computer logic equivalent of panicking.

The detection of 3.5 volts or more causes downstream logic gates to flip due to logic 1 being detected, but less than 3.5 volts causes the downstream logic gate to flop to logic 0.

There is a period of indecision due to logic gate ripple where the gates are all in an indeterminate state during the ripple and they take a period of time to settle into the final state of correct logic.

The larger the number of gates, the longer it takes for the ripple to pass through and settle.

This period of settling in which the logic gates are performing illogically grows as circuits are miniaturised and with greater miniaturisation comes greater difficulty of determining logic 1 in any single gate.

The hope is of course that all logic gates will settle to the correct logic value. However, as you quite rightly point out, losses due to miniaturisation plus abnormalities can disrupt the logic gate performance.

We cannot wait forever for logic circuits to settle so we make assumptions that within a set period of time all rippling will have ceased and logic circuits will have reached the correct values.

A typical 4 logic gate cluster takes 300 ps to settle. We need not explore the ramifications of this; 300 trillionths of a second is not much time, but compounded by the sheer number of logic gates in a system, it can actually take a significant amount of time for all rippling to cease in all the gates.

This is one of the limiting factors on the growth of computer systems.

After rippling has ceased we then read the output of all the gates, allowing time for all rippling to end plus x, a safety margin. We cannot risk reading a logic gate output if it is still rippling.

But what happens when a passing neutrino enters a logic gate and trips a gate from logic 1 to logic 0 during the safety margin wait state?

Rippling must begin again. This passing neutrino could also, by the way, stab a binary value in a stored program on the hard drive, changing the program forever and preventing the computer from obeying the designed code, because the code has now changed and is no longer as programmed.

We are bombarded by neutrinos every day and most pass through us harmlessly, but when a neutron interacts with the semiconductor material it deposits charge, which can change the binary state of the bit. Neutrinos can only be stopped by lead or concrete, so computers are vulnerable to them in stored programs, in RAM, in ROM and in the data carried on the bus.

We may then finish up reading the logic output during a new rippling state caused by a neutrino attack. We then have a 50/50 gamble that the logic is in error.

Exactly as you described and pointed out, J.Jericho.

And exactly as you suggest greater miniaturisation makes this effect more likely as gates miniaturise more, but neutrinos do not miniaturise and their effects could become more profound for logic circuits as we miniaturise more over time.

The more logic gates you have the more opportunity there is for Neutrino disruption.

This is a very long post I know but we are deep diving here into complex areas that usually are hidden from the general public and largely unknown to them.

The present state of miniaturisation of logic is called VLSI (very large scale integration), and it brings issues that are difficult to address relating to the robustness and reliability of these very large scale integrated circuits.

I am comfortable, J.Jericho, that you already know all of this and more besides, possibly more than I do on this topic given your excellent posts.

In examining this topic we are very close to the cutting edge of computing and its far-reaching implications for the future of computer systems. As we plod ever onwards down the road of greater miniaturisation, we take on greater risk of failures due to the very miniaturisation that we seek.

I intended this post to illustrate exactly why your post is technically correct and on topic. I do not intend to slight other members; very few people understand this stuff, and we can only know what we know.

Most members know lots of stuff I do not know and cannot hope to know and I want to make it quite clear that not knowing this deeply technical and difficult subject is no reflection on them at all.

Moore's law says the number of transistors on a chip doubles roughly every 2 years. I would suggest that risk doubles along with that.

I am not at all surprised that most people do not know all this. It is the province of microelectronics engineers, largely hidden from the public, and there is no need for the public to know these things; only electronics engineers, chip designers and systems designers need to know this stuff.

What does surprise me on a daily basis is how reliable computers are given their huge vulnerability to errors and mishap.

Computers seem to me to fly less like an F15, and more like a bumble bee, they just manage to get there despite being a bit poor at flying.

-

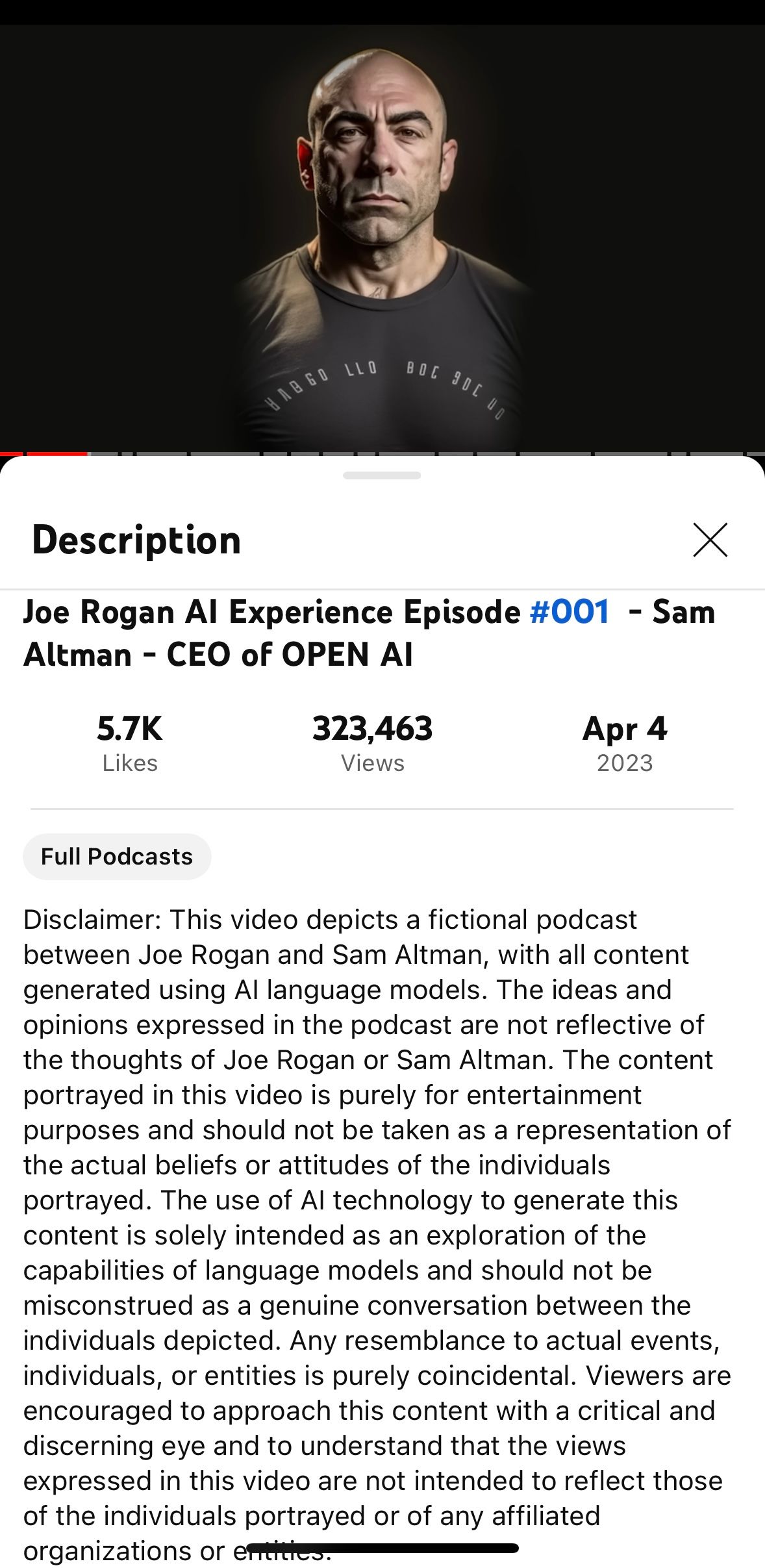

This is not an example of AI destroying the world, but it is nevertheless significant. It is a totally AI-created podcast of Joe Rogan interviewing Sam Altman, CEO of OpenAI. There is no participation of either person and it is a "deepfake", all generated by AI. The implications are endless.

-

@j-jericho said in Bots are getting scary:

From a layman's perspective, does not electronic communication rely on electric pulses perfectly following their paths with the precise voltage and duration? And considering the physical size of the hardware, is it possible that electronic pulses can, due to extreme miniaturization, sometimes follow a path not intended by its designers, creating unexpected errors which can be difficult to trace or replicate, yet nevertheless cause a program to function abnormally?

Yes, it is possible. It happens from time to time that a bit "flips" in a memory chip, causing a 1 to become a 0 or vice versa. It's usually attributed to cosmic radiation.

Another possibility is that a bit becomes flipped in transit, during a network transmission, by randomly interjected electrical fluctuations in wiring, or cosmic interference with the transmitting or receiving chip set.

The good part is, most of these occurrences will either:

- (best case) cause an error or exception to be thrown in the running program, because the corrupted data can no longer be interpreted, or, if it was program code that got corrupted, the resulting instruction is invalid. Normal computers will fail in a "loud" way if this happens, and normal program error handling should ensure that nobody gets killed as a result. A program crash is a common symptom.

- cause a computation to continue executing normally but with incorrect data. In fault-tolerant systems such as those used in aeronautics, this can be discovered and corrected for by doing the same calculations in redundant systems. Normal computers will not find or correct for this type of error.
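The redundancy idea in the second bullet can be sketched in a few lines of Python. This is only a toy under stated assumptions: `compute` is a made-up stand-in for a real calculation, the bit flip is simulated by hand, and `majority_vote` is an illustrative name rather than part of any avionics software.

```python
from collections import Counter


def majority_vote(results):
    """Return the value reported by more than half of the redundant units."""
    value, count = Counter(results).most_common(1)[0]
    if count <= len(results) // 2:
        raise ValueError("no majority; the redundant results disagree")
    return value


def compute(x: int) -> int:
    """Stand-in for the real calculation run on each redundant unit."""
    return x * x + 1


good = compute(12)         # 145
flipped = good ^ (1 << 3)  # simulate a single bit flip in one unit's result

print(flipped)                                # 153
print(majority_vote([good, flipped, good]))   # 145
```

With three units, one silently wrong answer is outvoted by the other two. If all three disagree, the vote fails loudly instead of picking a value.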

There are statistics from the big cloud computing players on how common bit flips are. IIRC, you won't see flips daily or weekly per computer, but you can have multiple memory bit flip incidents during the multi-year life of an average computer. Due to good modern programming practices, these very rarely become a problem to the user.

Error correction techniques are employed to try to detect or correct bit errors. In communications, bit errors are fairly common and the communications protocols will contain contingencies like check-sums and automatic re-transmission of failed packets.
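A minimal sketch of the checksum-plus-retransmission idea, in Python. It uses the standard library's `zlib.crc32`; everything else (the packet layout, `noisy_channel`, `send_with_retry`, the retry limit) is a hypothetical simplification and not how any specific protocol is specified.

```python
import zlib


def make_packet(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 of the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def check_packet(packet: bytes):
    """Return the payload if the CRC matches, otherwise None."""
    payload, received_crc = packet[:-4], int.from_bytes(packet[-4:], "big")
    return payload if zlib.crc32(payload) == received_crc else None


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Simulate a single bit error in transit."""
    corrupted = bytearray(data)
    corrupted[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(corrupted)


def noisy_channel(packet: bytes, attempt: int) -> bytes:
    """Hypothetical link that corrupts only the first transmission."""
    return flip_bit(packet, 5) if attempt == 1 else packet


def send_with_retry(payload: bytes, channel, max_attempts: int = 3):
    """Resend until the receiver's CRC check passes or attempts run out."""
    for attempt in range(1, max_attempts + 1):
        received = check_packet(channel(make_packet(payload), attempt))
        if received is not None:
            return received, attempt
    raise IOError("gave up after repeated checksum failures")


data, attempts = send_with_retry(b"hello trumpet", noisy_channel)
print(data, attempts)  # b'hello trumpet' 2
```

The first copy fails the CRC check and is discarded; the second arrives intact, so the application never sees the corrupted data.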

Memory in consumer hardware doesn't have error correction codes (ECC) but more expensive server hardware generally does. Anecdotes abound but I've heard that servers with big memories will register a couple of correctable events a week, which the ECC memory handles automatically.
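To show what "correctable" means, here is a sketch of a Hamming(7,4) code in Python, the textbook single-error-correcting code. This is an illustration only: real ECC memory typically uses wider codes over whole memory words, and the function names here are made up for the example.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits into a 7-bit codeword (positions 1..7: p1 p2 d1 p3 d2 d3 d4)."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit; return (data_bits, error_position or 0)."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s3  # syndrome gives the 1-based position of the bad bit
    if error_pos:
        c[error_pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], error_pos


stored = hamming74_encode([1, 0, 1, 1])
stored[4] ^= 1  # simulate a bit flip at position 5

print(hamming74_decode(stored))  # ([1, 0, 1, 1], 5)
```

The decoder both recovers the original four bits and reports which position was flipped, which is roughly what a server logs as a correctable memory event.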

-

@jolter Many thanks for your clear, comprehensive, concise, easy-to-understand explanation!

-

I agree with J.Jericho: your post is exceptionally well presented, accurate and clear.

I would add, however, that while you are quite correct when you say that memory in consumer hardware doesn't have error correction codes (ECC) but more expensive server hardware generally does, we do end up with error detection and correction due to the OSI 7 layers and the way they are implemented.

Typically hardware and software manufacturers include error checking at the OSI boundary their equipment communicates across.

The end result is error checking of the function of the consumer device by the back door.

This can and sometimes does lead to excessive and repeated error checking.

For example when sending information from the application layer in source machine 1 across a comms link to the application layer on destination machine 2, the data traverses 14 OSI boundaries so if we error check at every boundary transition, we error check the same data 14 times.

While in the classroom and the lab, error checking is mandated and always held to be a good thing, in the real world this excessive error checking has been known to kill the data transfer and cause catastrophic failures.

I have personal experience of this.

-

@trumpetb said in Bots are getting scary:

I agree with J.Jericho: your post is exceptionally well presented, accurate and clear.

I would add, however, that while you are quite correct when you say that memory in consumer hardware doesn't have error correction codes (ECC) but more expensive server hardware generally does, we do end up with error detection and correction due to the OSI 7 layers and the way they are implemented.

Typically hardware and software manufacturers include error checking at the OSI boundary their equipment communicates across.

The end result is error checking of the function of the consumer device by the back door.

You’re overcomplicating things, as usual. There are always more details that we could add to any answer, but sometimes brevity is the key to getting the audience’s understanding.

Of course, the error correction of the various network layers was what I was getting at when I wrote about network retransmission. I just glossed over some details.

One more thing, the OSI model is not implemented by any of the major OSs in the market today. Windows, Linux and Mac OS each only implements the bottom four layers, in the form of the TCP/IP stack. I don’t know if the OSI model was ever fully realized, even in the old Unixen. So better update your knowledge, it’s only been four levels down and four up, since the Internet came about.

-

You are turning a discussion into an argument again.

Whether you like it or not, the fact is the 7 layers exist, from layer 7, the application layer that we interact with, to layer 1, the physical layer, the lowest hardware level that is implemented for interconnectivity.

The internet is not the only game in town, there is a variety of different connectivity that is not internet based, we are talking radio based, defence based, high security applications, small bespoke implementations that avoid the internet.

NASA for example does not use the internet to communicate with devices in orbit. The Navy does not use the internet to communicate with ships at sea.

And in these lesser observed areas manufacturers implement the 7 layers for safety, data integrity, and security.

As for updating my knowledge, I don't need to. I have worked at all levels in support of PCs, peripherals, servers, server farms and clusters, for individuals, companies, corporations, defence organisations, banks, hospitals, government and the armed forces.

The majority of my work was internet based and companies often cut corners there, but the internet was only one small part of the entire mix of connectivities I was responsible for supporting.

I do not wish to turn this into a fight, I was not correcting you I was praising your knowledge and contributing.

You seem very defensive however.

I was not questioning your knowledge, so why are you questioning mine, particularly when you make assertions that conflict with my hard-won experience in industry working at all levels? And I have a significant amount of experience, from the very highest level to the lowest.

I was simply pointing out that the OSI layers exist in the same way that laws exist. Not all manufacturers comply with the OSI and not all people comply with the law, but people should comply with the law and companies should comply with OSI.

But where they do comply with OSI and where they do comply with good practice, they apply error checking when transporting data between layers. This cannot be denied. If you were to deny this I would be forced to question your knowledge and experience.

It could be the case that you are very knowledgeable academically but educationalists do not know everything and in the real world academic knowledge sometimes falls short.

Error checking between OSI layers exists. It has not disappeared simply because it is not always applied by manufacturers who choose not to implement it.

Spanning of course exists; where devices span layers, error checking between those layers is unnecessary.

And simply because many manufacturers choose not to implement it does not mean we should not implement it. We should not abandon laws just because lots of people ignore them.

Now for a real world example of implementation of the OSI 7 layers and of error checking that destroyed a business's ability to operate.

I was tasked some years ago with resolving a catastrophic failure in a company that prevented any of the company's home workers from connecting and functioning.

The problem turned out to be caused by the error checking in the device at layer 1.

It was finding errors in the data every few seconds and then forcing a disconnect and resend of the data. Nobody could work and the company's survival was threatened. This was a tier 1 catastrophic failure, and every engineer assigned to it globally had failed to resolve it despite escalations and extensive work on it.

Nobody had the guts to turn off the error checking because it was bad practice to do so, as it was mandatory to have error checking at the OSI layer boundary for this equipment.

I had to insist that we break the rules and disable error checking. I got my way because the fault could not be resolved in any other way, and the equipment, with error checking turned off, performed faultlessly.

I was told YOU CAN'T TURN ERROR CHECKING OFF. Everyone was trained to never break this fundamental rule; it was a law. This is the difference between academic knowledge and real world knowledge. The world is not black and white; sometimes we have to break rules that teachers say must not be broken to get results.

I do believe that you are very knowledgeable but the way you have approached your posts suggests a very detailed academic knowledge that does not always work in the real world.

You are not the only very knowledgeable person in the world. And when I add information to a thread that you have commented on that does not mean that I am questioning or doubting your knowledge or abilities.

As for your assertion that brevity is the key to understanding, I disagree: brevity usually means leaving something out.

Technical subjects demand full and complete descriptions and answers, or the entire story is not told.

And with brevity comes lack of knowledge, and this lack of knowledge sometimes causes wrong decision making because we don't have all the facts.

In chat rooms nobody likes walls of text, but in technical descriptions walls of text are required or important information is missing.

The only way forward to escape this issue would be to refuse to speak technically in chat rooms, and that means we just chat pointlessly. I don't want that.

I refuse to miss out pertinent information simply because the reader cannot be bothered to read a full and complete text.

If they cannot be bothered to read a technical description in its entirety the fault lies with the reader and not the author.

Less is not more here, less is less.

-

@trumpetb

Hey, you just gave me an idea. How about I take your advice and never read any of your posts in the future? Then you won’t have to read my replies and I won’t have to parse huge walls of extraneous text.

That should make us both enjoy this forum a lot more.

Please don’t bother to respond to this message as you would only be shouting into the void.

-

I pretty much expected that response.

The response of a spoiled child who has read up on a subject, is incapable of an adult conversation on the topic and just wants to "prove" how much he knows.

If you don't like what is written you always have the option to not read it, but in your case you choose instead to try to force the writer to obey you, and you ridicule them if they don't do that.

I am happy you have chosen silence for the future, but if you do answer any of my posts in an adult manner I will be more than happy to have a dialogue with you.

Until then, farewell and good luck.

-

@trumpetb I too have an allergy for "long winded" anything. 5 minutes of content taking up an hour or two is actually an insult. So it is with many of your posts - at the core, a line or two of good content (that I do not always agree with but still find it "good" as it makes me think about things) but so much "fluff" that pales in comparison.

I can appreciate having lots of time on hand and using that time on social media. That does not mean that your pleasure transfers to ours.

In your case, I simply do not even "parse" anything from you longer than 10 lines or so. The content is simply not worth it.

I do not consider Jolter to be a spoiled child. He agrees or disagrees and keeps his content "compact". He has nothing to prove and never had. I will not comment on what I think about your literary circular breathing.

-

Succinct posts are praiseworthy; they leave more time for us to play our trumpets. Backing down from animosity saves stress; clicking the "Ignore" button in our brains helps enable this and keeps civility predominant on TrumpetBoards and elsewhere.

-

My mistake.

These are chat room rules and I don't do chat rooms.

I don't need a chat room.

I like to learn new things and that often means lengthy posts.

I was putting lengthy posts in a chat room.

I wish you well with your chatting however.

-

Something bots lack, from Strunk & White "The Elements of Style:"

"Vigorous writing is concise. A sentence should contain no unnecessary words, a paragraph no unnecessary sentences, for the same reason that a drawing should have no unnecessary lines and a machine no unnecessary parts. This requires not that the writer make all his sentences short, or that he avoid all detail and treat his subjects only in outline, but that every word tell."

-

An interesting article today on AI and ChatGPT: “Europe Sounds The Alarm On ChatGPT”

An excerpt:

“…. that ChatGPT, just one of thousands of AI platforms currently in use, can assist criminals with phishing, malware creation and even terrorist acts.

“If a potential criminal knows nothing about a particular crime area, ChatGPT can speed up the research process significantly by offering key information that can then be further explored in subsequent steps,” the Europol report stated. “As such, ChatGPT can be used to learn about a vast number of potential crime areas with no prior knowledge, ranging from how to break into a home to terrorism, cybercrime and child sexual abuse.”

The full article link is here:

https://news.yahoo.com/europe-sounds-the-alarm-on-chatgpt-090013543.html

-

@ssmith1226 said in Bots are getting scary:

An interesting article today on AI and ChatGPT: “Europe Sounds The Alarm On ChatGPT”

An excerpt:

“…. that ChatGPT, just one of thousands of AI platforms currently in use, can assist criminals with phishing, malware creation and even terrorist acts.

“If a potential criminal knows nothing about a particular crime area, ChatGPT can speed up the research process significantly by offering key information that can then be further explored in subsequent steps,” the Europol report stated. “As such, ChatGPT can be used to learn about a vast number of potential crime areas with no prior knowledge, ranging from how to break into a home to terrorism, cybercrime and child sexual abuse.”

The full article link is here:

https://news.yahoo.com/europe-sounds-the-alarm-on-chatgpt-090013543.html

So guys, post pictures of or messages from vacation AFTER you get back home, not during the act.

Nothing worse than returning home to an empty studio!

-

An article from May 8, 2023 New York Magazine indicates that deep fake vocals are of outstanding quality and are getting simple to create. I would imagine that the deep fake virtuoso instrumentalist is not too far behind.

Below is a brief selection and a link to the full article.

“AI Singers Are Unnervingly Good and Already Ubiquitous

The software that cloned Drake and the Weeknd is easy to use—and impossible to shut down…

…Two months ago, AI voice-cloning technology barely existed. Now it’s forcing the music industry to consider such tricky questions as whether pop stars own the sounds produced by their own larynges and if we even need flesh-and-blood pop stars at all anymore…”

https://www.vulture.com/article/ai-singers-drake-the-weeknd-voice-clones.html

-

@ssmith1226 What's next... AI Abraham Lincoln singing "Another One Bites The Dust"?

-

Even better